Home

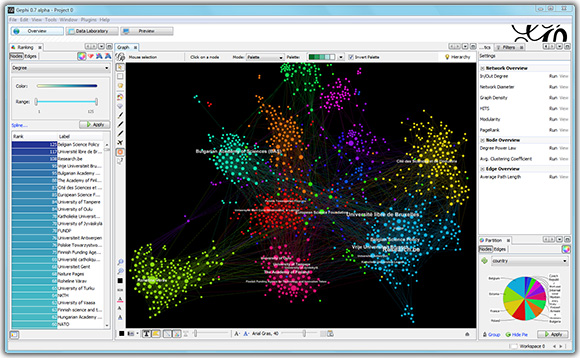

Gephi is an interactive visualization and exploration platform for all kinds of networks and complex systems, including dynamic and hierarchical graphs.

Gephi is a tool for people who need to explore and understand graphs. Like Photoshop but for graphs, it lets the user interact with the representation and manipulate structures, shapes, and colors to reveal hidden properties. The goal is to help data analysts form hypotheses, intuitively discover patterns, and isolate structural singularities or faults during data sourcing. It complements traditional statistics, since visual thinking with interactive interfaces is now recognized as facilitating reasoning. Gephi is software for Exploratory Data Analysis, a paradigm that emerged from the Visual Analytics research field.

- Developer Handbook

- Build

- Code Style

- Localization

- Datasets

- Import CSV Data

- Import Dynamic Data

- Scripting Plugin

- Quick Start

- Démarrage rapide (FR: Quick Start in French)

- Layout

- Spatialisations (FR: Layout in French)

- Statistics

- Import

- Spigot importer with Wizard

- Export

- Generator

- Filter

- Extend Data Laboratory

- Preview renderer

- Add a module panel

- Add a submenu

- Build a plugin without Gephi source code

- Update a plugin

- Code Sharing Strategy

- Graph Streaming